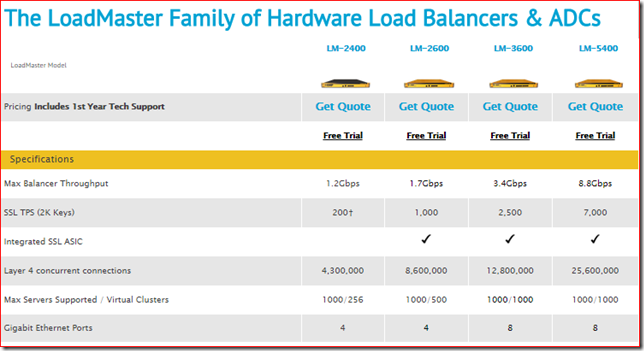

The KEMP Technologies LoadMaster range of load balancers spans from very affordable entry-level models up to the real workhorses. To choose the right model, you need to consider the number of network interfaces you need and how many real or virtual servers you want to use, and estimate your expected throughput and SSL TPS.

Of all these parameters, SSL TPS is the one that most often confuses people.

What is SSL TPS?

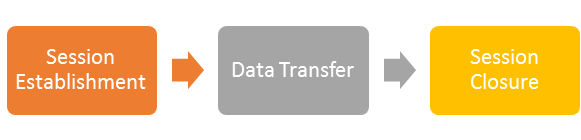

SSL TPS is the number of SSL (Secure Sockets Layer) Transactions Per Second. First we need to understand what a transaction is. An SSL transaction consists of three phases: Session Establishment, Data Transfer and Session Closure.

The Session Establishment phase is the most expensive from a performance point of view. This is where authentication, the handshake and the key exchange take place, and where the encrypted session is created. The Data Transfer phase is where the actual data is transferred, and during the Session Closure phase the client and server tear down the connection.

So TPS is the number of new SSL sessions per second, not to be confused with concurrent (already established) SSL sessions.
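One way to see why TPS and concurrent sessions are different numbers is Little's law, a general steady-state queueing rule (not a KEMP formula): the average number of open sessions equals the rate at which new sessions arrive multiplied by how long each session lasts. The sketch below uses hypothetical figures to show that the same concurrency can come from very different TPS loads.

```python
def concurrent_sessions(new_sessions_per_sec: float, avg_session_seconds: float) -> float:
    """Little's law: average open sessions = arrival rate x average session lifetime.

    This is a steady-state approximation; real traffic fluctuates around it.
    """
    return new_sessions_per_sec * avg_session_seconds


# Hypothetical workloads that both keep about 100 sessions open:
short_lived = concurrent_sessions(100, 1)  # 100 new handshakes per second, ~1 s each
long_lived = concurrent_sessions(1, 100)   # 1 new handshake per second, ~100 s each
print(short_lived, long_lived)  # 100 100
```

The first workload is far more demanding on the SSL engine, because it performs one hundred times as many expensive Session Establishment phases per second.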

SSL and ADCs

Creating an SSL session requires CPU resources, and general-purpose x86 processors are not particularly efficient at this task. This is why certain ADCs have dedicated hardware to perform it, called an ASIC (Application-Specific Integrated Circuit). The LoadMaster LM-2600, LM-3600 and LM-5400 are examples of ADCs with an SSL ASIC. Traditionally, an ADC with an SSL ASIC was used to offload the SSL traffic and forward it over unencrypted HTTP to the real server.

Today, SSL offloading also enables the ADC to perform Layer 7 tasks such as content switching and intrusion prevention (Intrusion Prevention System, IPS). And with the power of modern hardware, it is common practice to re-encrypt the traffic before it leaves the ADC for the real server.

Calculate the TPS

To calculate the expected SSL TPS you need to understand both the traffic characteristics of your application as well as the expected load the users will cause.

For a typical HTTP application you need to understand:

- the number of unique visitors

- the number of HTML pages loaded per user session

- the number of requests made to the web-server per HTML page

Plan for peak usage: burst load can be three to four times the average load.
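The factors above can be combined into a rough back-of-the-envelope estimate. The sketch below is a hypothetical helper, not a KEMP sizing tool. It assumes visitors are spread evenly over a chosen time window, and it models connection reuse (HTTP keep-alive) with an assumed `requests_per_handshake` value, where 1.0 is the worst case of one new SSL handshake per request. The burst factor defaults to the upper end of the three-to-four-times range.

```python
def estimate_peak_ssl_tps(
    unique_visitors: int,
    pages_per_visit: float,
    requests_per_page: float,
    window_seconds: float,
    requests_per_handshake: float = 1.0,
    burst_factor: float = 4.0,
) -> float:
    """Rough peak SSL TPS estimate (hypothetical sizing helper).

    requests_per_handshake: assumed average number of requests served over one
        SSL connection; 1.0 means every request needs a new handshake.
    burst_factor: multiplier from average to peak load.
    """
    total_requests = unique_visitors * pages_per_visit * requests_per_page
    new_sessions = total_requests / requests_per_handshake
    average_tps = new_sessions / window_seconds
    return average_tps * burst_factor


# Hypothetical: 36,000 visitors in one hour, 5 pages each, 20 requests per page.
print(estimate_peak_ssl_tps(36000, 5, 20, 3600))                             # 4000.0
print(estimate_peak_ssl_tps(36000, 5, 20, 3600, requests_per_handshake=10))  # 400.0
```

The second call shows how strongly connection reuse drives the result, which is one reason measuring real traffic is often more reliable than calculating it.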

Measure the TPS

A more hands-on and practical way to determine SSL TPS may be to simply measure it in a production or lab deployment. If you don't have an existing solution in place to measure, I suggest you download a trial version of the KEMP LoadMaster VLM. The VLM comes with a 30-day temporary license, which should be sufficient to perform some tests in your environment.

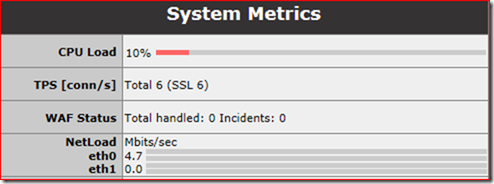

After you have created the Virtual Service and directed users to the LoadMaster, you can read the TPS and throughput in real time in the System Metrics section of the Home page.

This screenshot is taken from a small Exchange 2013 environment with ~700 active users using Outlook Anywhere in Online Mode and an average of 1.5 ActiveSync devices per user.

This customer plans to use the LoadMaster for several other applications in the near future. The VLM-2000, with its 2 Gbps throughput and up to 1,000 SSL TPS, seems to be the right choice: it offers more than enough performance with sufficient headroom for peak usage.
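A measured value can be turned into a simple headroom check against a model's rated SSL TPS. The sketch below is hypothetical: the measured average is an illustrative number, not the figure from the screenshot, and the burst factor is the assumed upper end of the three-to-four-times range for peak load.

```python
def headroom_ratio(rated_tps: float, measured_avg_tps: float, burst_factor: float = 4.0) -> float:
    """How many times the expected peak fits into the rated SSL TPS.

    A result above 1.0 suggests the unit should absorb the expected burst;
    below 1.0 suggests a larger model is needed.
    """
    expected_peak = measured_avg_tps * burst_factor
    return rated_tps / expected_peak


VLM_2000_RATED_TPS = 1000  # "up to 1,000 SSL TPS" for the VLM-2000
measured_avg = 50          # hypothetical average TPS read from System Metrics
print(headroom_ratio(VLM_2000_RATED_TPS, measured_avg))  # 5.0
```

Keeping the ratio comfortably above 1.0 leaves room for the additional applications a customer plans to add later.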

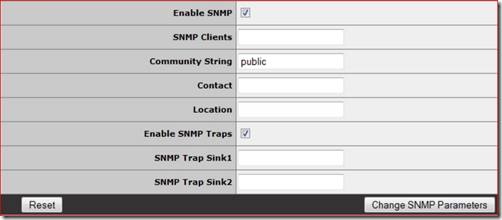

An alternative approach is to enable SNMP on the LoadMaster.

The MIB can be found in the Tools section of the LoadMaster Documentation site. Then use your favorite SNMP tool to collect and log the data, for instance Paessler's PRTG.
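SNMP tools typically derive a per-second rate from two samples of a cumulative counter. The sketch below assumes that the object you poll from the LoadMaster MIB is such a cumulative counter (check the MIB for the actual object and its type; no specific OID is assumed here). SNMP Counter32 values wrap back to zero after 2^32 - 1, so the difference is taken modulo 2^32, which handles at most one wrap between samples.

```python
def counter_rate(previous: int, current: int, interval_seconds: float, counter_bits: int = 32) -> float:
    """Per-second rate from two samples of a cumulative SNMP counter.

    Assumes at most one wrap between samples; Counter32 wraps at 2**32,
    Counter64 at 2**64 (pass counter_bits=64).
    """
    if interval_seconds <= 0:
        raise ValueError("interval_seconds must be positive")
    modulus = 2 ** counter_bits
    delta = (current - previous) % modulus  # non-negative even across a wrap
    return delta / interval_seconds


# Hypothetical samples taken 60 seconds apart:
print(counter_rate(10_000, 16_000, 60))       # 100.0
print(counter_rate(2**32 - 1000, 2000, 60))   # 50.0 (counter wrapped)
```

Short polling intervals reduce the chance of missing a double wrap on a busy counter, at the cost of more SNMP traffic.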